XR Hand Tracking: Home Concepts

Project: VR Designer + AR Designer

Role: Developer/Designer

Platforms: Meta Quest 2

Technologies: Unity, macOS

Featured APIs: Oculus Integration Toolkit (v49), Apple RoomPlan

Home design is clearly one of the early standouts of practical XR - especially AR. Placing AR objects in a real space to test their size and visual appeal is something that has gotten a lot of use in my household over the last few years. I wanted to brainstorm a couple of quick concepts for home design using VR, bringing a user's real home space into a virtual one.

There are two concepts in this video. Here are the one-line descriptions of each:

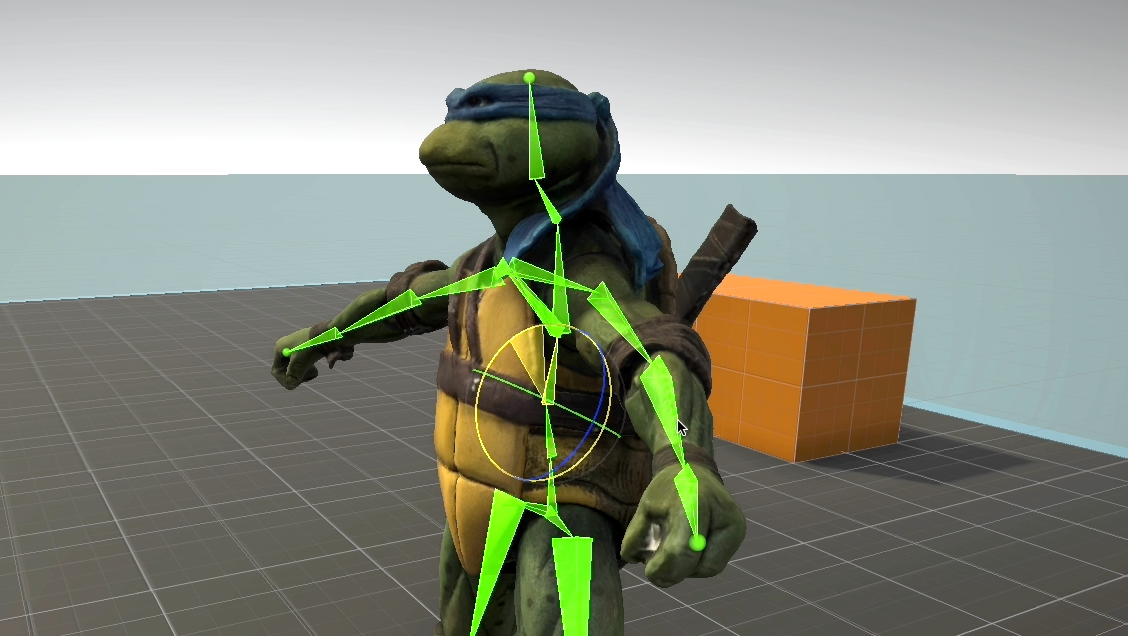

Concept 1: “Bring a real world location into VR - allowing it to be decorated with realistic and natural hand controls”

Concept 2: “Bring a real world location into AR - allowing it to be decorated with realistic and natural hand controls”

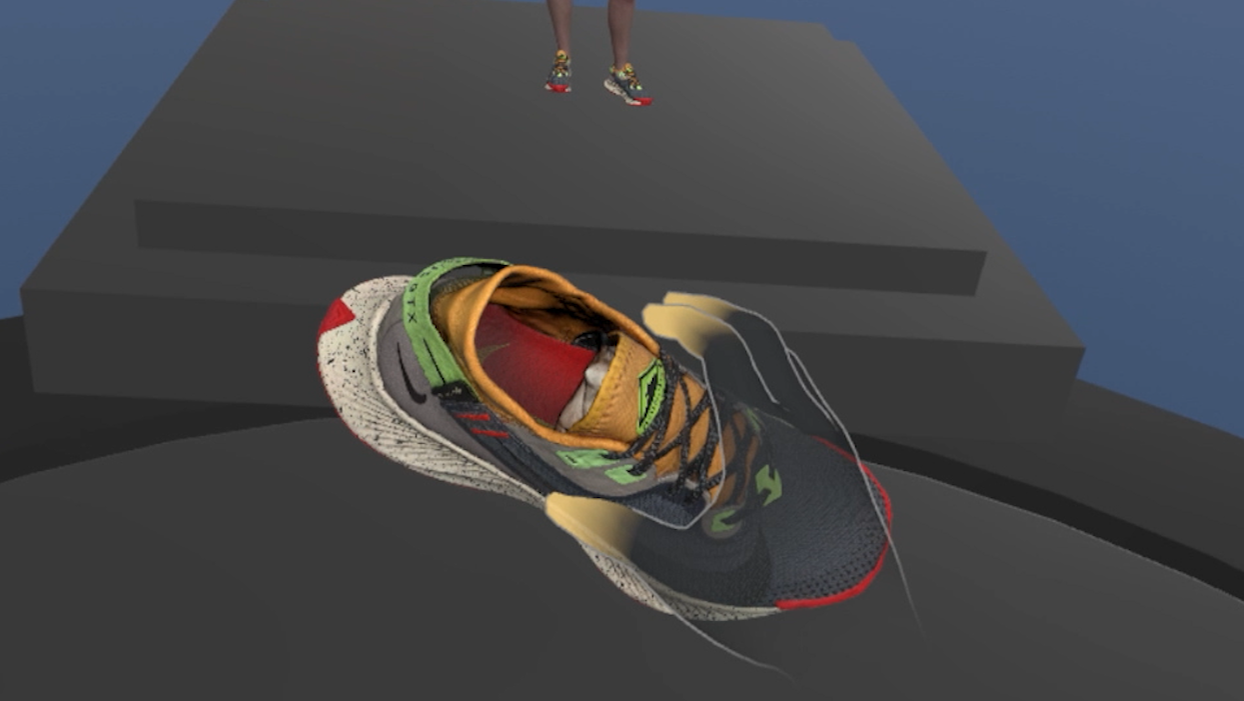

Concept 1 uses the iOS RoomPlan API to do a quick room scan of my bedroom, generating a real-world-scale 3D model. RoomPlan intelligently identifies walls, windows, and doors, and also makes guesses as to what’s a bed or a dresser. Putting that data through Blender allowed me to remove the furniture objects and adjust normal directions (which allows the walls to be seen through, “doll house style”). This dollhouse VR view lets the user drag furniture around and design the room’s layout. Then a jump into room-scale VR lets them walk around the space, and even adjust it further by pushing or pulling objects.
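The see-through walls come down to backface culling: with single-sided rendering, a face is only drawn when its normal points toward the viewer. Here is a minimal sketch of that test in plain Python (not the project's Unity C#). The function name, the wall positions, and the camera positions are all made up for illustration, and it assumes the walls are rendered single-sided with their normals pointing into the room.

```python
def is_face_visible(normal, face_point, camera_pos):
    """Return True if a single-sided face is drawn under backface culling.

    The face is drawn only when its normal points toward the camera, i.e. the
    dot product of the normal and the face-to-camera vector is positive.
    """
    to_camera = tuple(c - p for c, p in zip(camera_pos, face_point))
    dot = sum(n * t for n, t in zip(normal, to_camera))
    return dot > 0


# Hypothetical room centered on the origin, with walls at x = 2 and x = -2.
near_wall_point = (2.0, 1.0, 0.0)
near_wall_normal = (-1.0, 0.0, 0.0)   # points into the room
far_wall_point = (-2.0, 1.0, 0.0)
far_wall_normal = (1.0, 0.0, 0.0)     # points into the room

dollhouse_camera = (5.0, 1.5, 0.0)    # viewer outside, looking in
room_scale_camera = (0.0, 1.5, 0.0)   # viewer standing inside the room

# From outside, the nearest wall is culled, so the viewer can see in.
near_from_outside = is_face_visible(near_wall_normal, near_wall_point, dollhouse_camera)
# The far wall still faces the viewer, so it stays visible as a backdrop.
far_from_outside = is_face_visible(far_wall_normal, far_wall_point, dollhouse_camera)
# From inside, the same wall faces the viewer and is drawn normally.
near_from_inside = is_face_visible(near_wall_normal, near_wall_point, room_scale_camera)
```

With inward-facing normals, one mesh works for both views: walls drop away when seen from outside and look solid once the user steps into room scale.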

Concept 2 moves closer to an AR concept by experimenting with the Quest 2’s “Passthrough Mode”. This is a simple black-and-white video view of the user’s environment (Quest Pro offers a similar color view), but it’s still very effective for overlaying virtual objects on a real surface. In this case, I built a dynamic picture frame that responds and resizes to grab gestures on its four corners. I also pulled images from a basic web server, and used gesture detection (swiping left/right) to step through those images.
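The frame logic can be sketched independently of the engine. The Python below is an illustrative approximation, not the project's Unity C# code: the `Frame` type, the corner names, the thresholds, and the choice that a left swipe advances to the next image are all assumptions. It models the frame as a rectangle on the wall plane, resizes it when one corner is dragged while the opposite corner stays put, and classifies a swipe from recent horizontal hand positions.

```python
from dataclasses import dataclass

MIN_SIZE = 0.1        # hypothetical minimum width/height in meters
SWIPE_DISTANCE = 0.25 # hypothetical horizontal travel (meters) that counts as a swipe
SWIPE_WINDOW = 0.5    # hypothetical time window (seconds) for that travel


@dataclass
class Frame:
    """Axis-aligned picture frame on the wall plane, in meters."""
    left: float
    bottom: float
    right: float
    top: float

    @property
    def size(self):
        return (self.right - self.left, self.top - self.bottom)


def drag_corner(frame, corner, x, y):
    """Move one corner ('tl', 'tr', 'bl', 'br') to (x, y).

    The opposite corner stays fixed, and the frame is clamped so it
    never gets smaller than MIN_SIZE or flips inside out.
    """
    left, bottom, right, top = frame.left, frame.bottom, frame.right, frame.top
    if "l" in corner:
        left = min(x, right - MIN_SIZE)
    else:
        right = max(x, left + MIN_SIZE)
    if "b" in corner:
        bottom = min(y, top - MIN_SIZE)
    else:
        top = max(y, bottom + MIN_SIZE)
    return Frame(left, bottom, right, top)


def detect_swipe(samples):
    """Classify a swipe from (time_s, hand_x) samples, oldest first.

    Only samples within SWIPE_WINDOW of the newest one are considered.
    Returns 'left', 'right', or None.
    """
    if len(samples) < 2:
        return None
    t_end = samples[-1][0]
    recent = [x for t, x in samples if t_end - t <= SWIPE_WINDOW]
    dx = recent[-1] - recent[0]
    if dx >= SWIPE_DISTANCE:
        return "right"
    if dx <= -SWIPE_DISTANCE:
        return "left"
    return None


def step_image(index, count, swipe):
    """Advance through `count` images, wrapping around at either end."""
    if swipe == "left":
        return (index + 1) % count
    if swipe == "right":
        return (index - 1) % count
    return index
```

In a real build, the corner positions and hand samples would come from the hand-tracking data each frame, and the index returned by `step_image` would pick which downloaded image to show.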

Tech Credits: This was all done in Unity (code is C#). The scanning app was built for iPadOS (written in Swift and provided by Apple). 3D model cleanup was done in Blender. And this is all running on a Meta Quest 2, using the Oculus Integration Toolkit v49.